Unlocking the Power of Vector Databases: Transforming Data Search and Discovery in the Age of Generative AI

The new wave of artificial intelligence (AI), driven by recent advances in generative AI, is rapidly changing the way we live, work, and interact. From chatbots and self-driving cars to social media algorithms and applications that create new audio, images, and text, AI has become an integral part of our lives. Its ability to analyze vast amounts of data, recognize patterns, and make accurate predictions has opened up possibilities across sectors including healthcare, finance, transportation, education, and entertainment.

Artificial intelligence is transforming every industry – but in doing so, it is also introducing further challenges that require modern solutions. Traditional databases and search methods have served us well over the years, but as the complexity and volume of data continue to grow exponentially, they are facing some limitations – the need to efficiently store, query, and process complex types of data (mainly unstructured!) has become more crucial than ever. Also, when it comes to Generative AI, the context of the data plays an extremely important role – traditional databases and search algorithms have difficulty capturing intricate relationships within complex datasets. For instance, in e-commerce, understanding the preferences of customers based on their browsing history, purchase behavior, and social interactions requires a sophisticated approach that goes beyond simple keyword matching.

Another challenge is that AI models need up-to-date information to give accurate answers, yet they are trained on data that becomes outdated over time. For example, at the time of writing, ChatGPT uses one of the most advanced Large Language Models available, which was trained on data up to late 2021, so it has limited knowledge of later events. Fortunately, there is a solution: you can BYOD (“Bring Your Own Data”) to provide these models with the specific information they need to answer your questions. But how do you figure out what data to provide when your dataset is large?

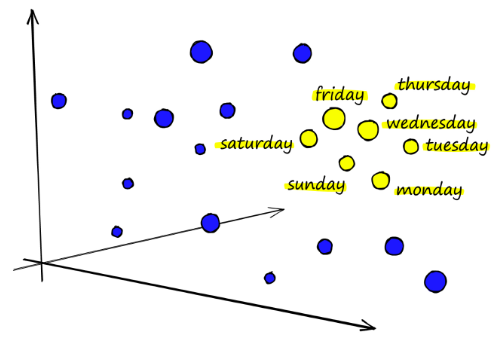

That’s where vector databases (also known as vector search engines) come into play. They are specialized data storage systems designed to handle and process multidimensional and context-rich data efficiently. In the context of AI and machine learning, we talk about vector embeddings – numerical representations of words, phrases, documents, images, or any other data type that capture their semantic meaning and relationships and are stored as dense, high-dimensional vectors. Embeddings carry information that is crucial for giving AI applications long-term memory and for understanding underlying structures, patterns, and relationships. They can be generated by AI models: BERT, Word2Vec, GloVe, or GPT for text data; convolutional neural networks (CNNs) for images; and, for audio, image-embedding models applied to the sound spectrogram.
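To make the idea of "semantic meaning as numbers" more concrete, here is a minimal sketch in plain Python. The three 4-dimensional vectors are made up by hand purely for illustration (real embedding models produce hundreds or thousands of dimensions), and `cosine_similarity` is our own helper, not part of any particular vector database.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hand-made toy "embeddings" (hypothetical values, not produced by a real model).
embeddings = {
    "cat":    [0.9, 0.8, 0.1, 0.0],
    "kitten": [0.8, 0.9, 0.2, 0.0],
    "car":    [0.1, 0.0, 0.9, 0.8],
}

sim_cat_kitten = cosine_similarity(embeddings["cat"], embeddings["kitten"])
sim_cat_car = cosine_similarity(embeddings["cat"], embeddings["car"])

print(f"cat vs kitten: {sim_cat_kitten:.3f}")
print(f"cat vs car:    {sim_cat_car:.3f}")
```

With these toy values, "cat" and "kitten" point in nearly the same direction, while "cat" and "car" do not, which is the property a vector database relies on when it compares embeddings.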

Vector databases offer several advantages over traditional databases, making them a better choice for certain applications. Here are some reasons why vector databases are considered better:

- Support for different data types – various data types (text, images, audio, video etc.) can be converted into vector embeddings, allowing for unified representation and analysis of diverse data in a vectorized form;

- Higher performance – vector databases are optimized to handle high-dimensional data, enabling faster query processing. They can efficiently perform complex mathematical operations like vector comparisons, aggregations, and searches, outperforming traditional databases in these tasks;

- Efficient storage – vector databases use vector compression techniques, so they can efficiently store and retrieve high-dimensional vector representations while minimizing storage requirements and maximizing query performance;

- Contextual and semantic search – vector databases capture the semantic meaning and contextual relationships between embeddings, enabling advanced search capabilities. This makes them well-suited for applications where understanding the context and relationships between data is crucial;

- Support for different similarity metrics – different similarity metrics for approximate nearest neighbor (ANN) algorithms may suit different use cases and data types, allowing users to choose the most appropriate metric based on their specific requirements and the nature of their data;

- Scalability and distributed processing – vector databases can spread data and queries across multiple nodes, so AI applications can keep searching vast amounts of data in near real time, even as the database grows;

- Metadata storage and filtering – vector databases can index additional information associated with each vector entry, enabling an additional level of filtering;

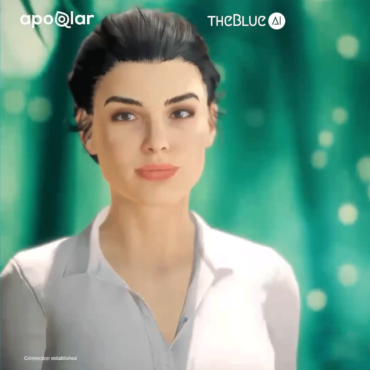

- Real-time updates – with the ability to update in real-time, vector databases ensure that AI models have access to the most current information. This feature is particularly useful for applications such as chatbots, virtual assistants, and recommendation systems that require up-to-date data for accurate responses;

- Integration with AI Frameworks – vector databases often provide libraries and integrations with popular AI frameworks, simplifying the integration of vector data into AI models. These integrations make it easier to maintain data, reducing development time and effort.
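Two of the points above, the choice of similarity metric and metadata filtering, are easy to sketch in a few lines of Python. This is an illustration under our own assumptions rather than the API of any specific product: the `items` list, the `lang` metadata field, and the `search` function are all hypothetical, and the scan is a simple exhaustive loop instead of a real index.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def neg_euclidean(a, b):
    # Negated so that, like the other metrics, a larger score means "closer".
    return -math.dist(a, b)

# Hypothetical entries: each has a vector plus metadata stored alongside it.
items = [
    {"id": "a", "vector": [1.0, 0.0], "meta": {"lang": "en"}},
    {"id": "b", "vector": [5.0, 5.0], "meta": {"lang": "en"}},
    {"id": "c", "vector": [0.9, 0.1], "meta": {"lang": "de"}},
]

def search(query, metric, where=None, k=2):
    """Score the entries that pass the metadata filter and return the top-k ids."""
    candidates = [
        it for it in items
        if where is None
        or all(it["meta"].get(key) == val for key, val in where.items())
    ]
    ranked = sorted(candidates, key=lambda it: metric(query, it["vector"]), reverse=True)
    return [it["id"] for it in ranked[:k]]

query = [1.0, 0.0]
by_cosine = search(query, cosine)              # direction only
by_dot = search(query, dot)                    # direction and magnitude
by_euclid = search(query, neg_euclidean)       # straight-line distance
english_only = search(query, cosine, where={"lang": "en"})

print(by_cosine, by_dot, by_euclid, english_only)
```

The same query ranks the entries differently depending on the metric: the dot product favors the long vector `b`, while cosine similarity and Euclidean distance prefer `a` and `c`. The `where` argument shows how a metadata filter narrows the candidates before similarity is computed.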

One of the main benefits of vector databases is their advanced search capabilities. Unlike traditional databases, which rely on exact matches of keywords or word frequencies, vector databases use approximate nearest neighbor (ANN) algorithms and various similarity metrics to understand the meaning and relationships between data. This means they can find similar items or provide relevant suggestions based on the context of the query by finding the nearest neighbors to the given (vectorized) query. This makes searching more accurate and helps discover relevant information, even if there are no exact matches for keywords. Overall, vector databases make searching smarter, more efficient, and best of all, context-aware, leading to better results and a more satisfying user experience.
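The difference between keyword matching and nearest-neighbor search can be shown with a small, self-contained Python sketch. Everything here is assumed for illustration: the document titles, their hand-assigned 3-dimensional vectors, and the query vector, which a real system would compute with the same embedding model used for the documents. The search is also exhaustive (exact) rather than approximate; ANN indexes exist to avoid this full scan on large collections.

```python
import math

# Hypothetical documents with hand-assigned toy embeddings.
documents = {
    "Budget notebook computers under $500": [0.9, 0.1, 0.1],
    "How to bake sourdough bread":          [0.1, 0.9, 0.1],
    "Marathon training plan for beginners": [0.1, 0.1, 0.9],
}

def keyword_search(query):
    """Return documents that contain every query word (exact matching)."""
    words = query.lower().split()
    return [doc for doc in documents if all(w in doc.lower() for w in words)]

def vector_search(query_vector, k=1):
    """Return the k documents whose vectors are nearest to the query vector."""
    ranked = sorted(documents, key=lambda doc: math.dist(query_vector, documents[doc]))
    return ranked[:k]

query_text = "cheap laptop"
# Assumed embedding of the query text (made up to sit near the "computers" document).
query_vector = [0.8, 0.2, 0.1]

keyword_hits = keyword_search(query_text)
vector_hits = vector_search(query_vector)

print("keyword search:", keyword_hits)
print("vector search: ", vector_hits)
```

The keyword search finds nothing because neither "cheap" nor "laptop" appears in any title, while the vector search still returns the notebook document because its vector is the closest one to the query.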

The use of vector search engines not only enables advanced search capabilities but also creates opportunities for new applications and developments. Here are some examples of what can be built with vector search engines and embeddings:

- Recommendation systems – storing user preferences as vectors and then finding similar items or users in embedding space. Recommendations are often used in e-commerce or by streaming platforms (Amazon, eBay, Netflix, YouTube, or Spotify);

- Similarity/semantic search – since the meaning and context of the data are captured in the embedding, vector search finds what users mean without requiring exact keyword matching. It works with text data (e.g., Google Search, Yahoo), images (e.g., Google Reverse Image Search), or audio (e.g., Shazam);

- Question answering systems – representing a knowledge base of documents and incoming questions as vectors, then retrieving the closest matching documents, based on vector similarity, to serve as the answer. This is useful in chatbots, virtual assistants, and customer support applications;

- Fraud detection/outlier detection – modeling user behavior patterns as vectors and identifying anomalies or suspicious activities based on similarity metrics. This helps in detecting fraudulent transactions or activities;

- Personalized searches/User Profiling – capturing user preferences and storing them as vectors. This leads to more relevant search results and improved user satisfaction;

- Data Analytics – vector databases support advanced data analytics tasks, including clustering and classification, pattern recognition, sentiment analysis, document similarity, and more;

- Time Series Analysis – vector databases can efficiently store and analyze time series data, such as sensor readings or financial data. By representing time series as vectors, it becomes possible to perform similarity-based searches, detect anomalies, or identify patterns and trends over time;

- Social Media Analysis – analyzing relationships and connections between individuals. This is beneficial for identifying communities and influencers and for detecting patterns in social networks;

- Other – including data preprocessing/browsing of unstructured data, re-ranking search results, one-shot/zero-shot learning, typo detection (fuzzy matching), detecting when machine learning models go stale (drift), medical diagnosis, etc.
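As one example from the list above, outlier detection can be sketched as "how far is each vector from the typical one?". The snippet below is a simplified illustration, not a production fraud model: the user names, the three behavior features, the values, and the `factor` threshold are all hypothetical.

```python
import math
import statistics

# Hypothetical per-user behavior vectors, e.g. (average transaction amount,
# transactions per day, share of night-time activity), already scaled to 0..1.
behavior = {
    "user_1": [0.20, 0.30, 0.10],
    "user_2": [0.25, 0.28, 0.12],
    "user_3": [0.22, 0.35, 0.08],
    "user_4": [0.18, 0.31, 0.11],
    "user_5": [0.95, 0.90, 0.85],
}

def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]

def find_outliers(data, factor=2.0):
    """Flag entries whose distance to the centroid exceeds factor times the median distance."""
    center = centroid(list(data.values()))
    distances = {name: math.dist(vec, center) for name, vec in data.items()}
    threshold = factor * statistics.median(distances.values())
    return [name for name, d in distances.items() if d > threshold]

outliers = find_outliers(behavior)
print("suspicious users:", outliers)
```

Comparing against the median distance keeps a single extreme user from hiding itself by dragging the threshold upward. In a real system the comparison would more likely be made against each user's nearest neighbors retrieved from the vector database.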

With the ability to handle high-dimensional data and support advanced similarity-based operations, vector databases provide valuable solutions in a wide range of domains and industries.

Selecting the appropriate vector database depends on various factors, including size, complexity, and granularity of the data, as well as other specific requirements, such as costs, existing Machine Learning Operations (MLOps), hosting, performance, and ease of configuration. All the databases offer an efficient way to store and process large amounts of data, but their approaches may vary. Each database may have its own unique methodology and techniques for handling vector data. It is important to explore and understand the various approaches used by different vector databases to determine which one best fits specific project needs and preferences.

Here are some of the most popular vector databases:

- Weaviate (Managed / Self-hosted vector database / Open source) – vector database that stores both objects and vectors, which allows vector search to be combined with filtering. Weaviate can be used stand-alone (aka bring your own vectors) or with a variety of modules that can do the vectorization for you;

- Milvus (Self-hosted vector database / Open source) – vector database built for scalable similarity search. Feature-rich, highly scalable, and blazing fast. With Milvus vector database, you can create a large-scale similarity search service in less than a minute;

- Pinecone (Managed vector database / Closed source) – vector database that makes it easy to connect your company data with generative AI models for fast, accurate, reliable applications without the need to manage a complex infrastructure. It is developer-friendly, fully managed, and easy to scale. At the time of writing, however, Pinecone does not have built-in integration with embedding models;

- Vespa (Managed / Self-hosted vector database) – fully featured search engine and vector database. It supports vector search (ANN), lexical search, and search in structured data, all in the same query. Integrated machine-learned model inference allows you to apply AI to make sense of your data in real-time;

- Qdrant (Open Source) – vector similarity search engine and vector database. It provides a production-ready service with a convenient API to store, search, and manage points – vectors with an additional payload. Qdrant is tailored to extended filtering support, which makes it useful for all sorts of neural-network or semantic-based matching, faceted search, and other applications;

- Chroma (Open Source) – an open-source embedding database. Chroma makes it easy to build Large Language Model apps by making knowledge, facts, and skills pluggable for LLMs;

- Vald – a distributed vector search engine that uses the NGT algorithm for fast approximate nearest neighbor search. Vald offers automatic vector indexing, index backup, and horizontal scaling designed for searching across billions of feature vectors. It is easy to use, feature-rich, and highly customizable;

- Faiss (Facebook AI Similarity Search) – a library for efficient similarity search and clustering of dense vectors. It contains algorithms that search in sets of vectors of any size, up to ones that possibly do not fit in RAM;

- Elasticsearch – open-source full-text search engine. In version 8.0, Elasticsearch added support for vector similarity search. Elasticsearch supports third-party text embedding models.

As the complexity and volume of data continue to grow exponentially, the traditional methods of data storage and search are no longer sufficient for certain applications. Vector databases provide a powerful alternative to traditional methods, enabling the discovery of valuable, context-aware patterns and relationships within complex datasets. By embracing the power of embeddings and similarity-based retrieval, organizations can unleash the full potential of their data assets. Whether it’s in recommendation systems, semantic search, or fraud detection, vector databases and vector search have become indispensable tools for modern data-driven enterprises. Leveraging the potential of artificial intelligence and machine learning will be critical to maintaining a competitive advantage in a data-centric world.

Would you like to build context-aware applications with vector search engines, but you don’t know where to start? Feel free to contact us!