What is Retrieval Augmented Generation (RAG), and why should you use it in your company?

In the age of big data and artificial intelligence, large language models (LLMs) are changing how we can analyze and interact with our data resources. They create new opportunities for more intelligent data processing techniques. They can help users analyze documents more quickly using complex questions, making knowledge acquisition and decision-making processes easier.

With businesses and organizations gathering loads of data and documents, it’s evident that we need better tools to manage, search, and effectively use all this information. Retrieval Augmented Generation (RAG) is a powerful approach to deliver new knowledge to models using augmented prompts. These solutions can enable employees to swiftly locate specific information without the laborious task of manually sifting through stacks of documents. Doesn’t that sound promising?

In this article, we’ll delve into the inner workings of the RAG system, unveiling its components, highlighting the main advantages it brings to the table, and acknowledging the potential challenges that may arise along the way.

What is Retrieval Augmented Generation (RAG)?

Retrieval Augmented Generation (RAG) is a method that enhances prompts by including context with relevant information from external data sources. This technique boosts the abilities of Large Language Models (LLMs) when it comes to working with custom data.

LLMs, such as the popular models from the GPT family, are very advanced tools. However, they have an important limitation. They lack specific knowledge about certain topics and mainly rely on what they’ve learned during their training, which is essentially a static set of knowledge.

This is where RAG comes into play. It combines techniques for retrieving information with text generation. In simple terms, it allows your AI to create content based on what it finds in a custom database or a collection of texts. The ultimate goal is to merge the power of pre-trained LLMs like GPT with fresh knowledge from text sources.

GPT is one of the leading models in the market. RAG takes it up a notch by providing GPT with the ability to absorb new knowledge from relevant documents.

Looking from the perspective of the LLM-based approaches so far, to enhance the model efficiency in a specific domain, fine-tuning was the way to go. Fine-tuning entails additional training using new data, which can be a time-consuming and costly process.

RAG offers a different approach. It enriches your prompts with extra context filled with relevant information. Think of it as a continuous knowledge boost. This dynamic approach allows models to access a constantly changing pool of data for more accurate responses. The best part? It reduces the need for frequent model retraining, saving both time and computational resources.

We can distinguish two main parts of the RAG approach:

– retrieval phase

– generation phase

Retrieval phase: In the retrieval phase, after receiving an input query, models turn to a text corpus or a database to find information or documents that may contain the answers needed.

Generation phase: In the generation phase, the system generates answers based on the retrieved text. During this step, the model combines the information it found with the foundational understanding it gained from its training data.

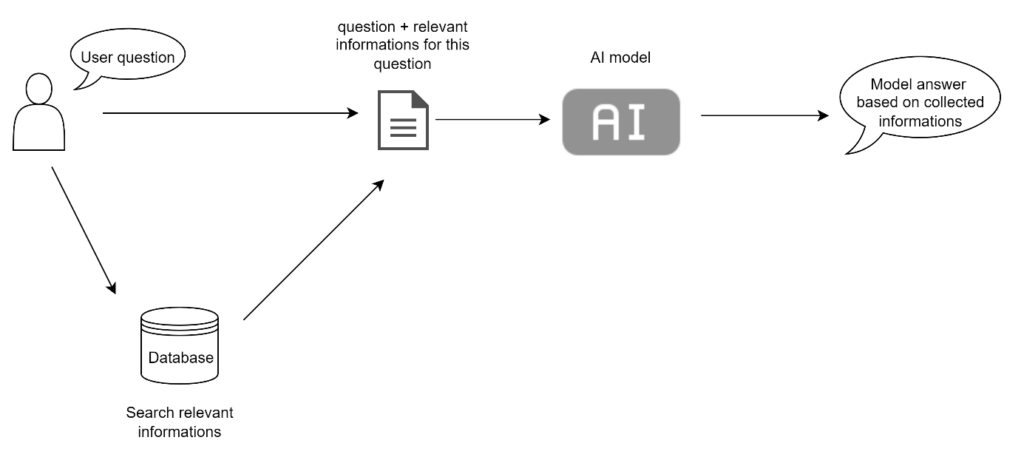

The diagram above shows how the RAG system works. It all begins with user questions, which are then processed to make searches efficient in the next step.

Next, the system looks for the most relevant information in a predefined collection of texts or a database, which might have the answers we’re looking for.

When the system searches for information, it goes beyond just matching keywords. It uses a smarter method called semantic search, which helps find related information better. Think of it like finding a book in a library using its topic rather than just its title. Tools like vector databases play a crucial role in making this happen. You can learn more about vector databases here.

The questions from users and the possible answers found during the search are combined to help the model come up with the final answer.

Advantages and challenges

One of the key benefits of RAG systems is their dynamic knowledge base. These systems give models access to a pool of knowledge that can be updated and expanded over time. RAG systems find practical applications in customer service, where they provide fast and accurate responses to user queries based on more recent information or knowledge in specific topics, as well as in content generation, such as creating custom reports.

There are some challenges involved in creating RAG systems. One challenge is ensuring that the data retrieved during the search process is relevant. Additionally, the retrieval step often requires adding extra components, like a vector database, to the system. While these components can be valuable, they may increase response times and require ongoing maintenance. Building efficient RAG systems demands expertise and experience in this field.

Conclusion

Retrieval Augmented Generation (RAG) systems introduce a novel approach to managing text information and developing AI systems that are more tailored and dynamic. They assist in expanding the knowledge within models by incorporating fresh context obtained from custom documents. This approach combines the efficiency of large language models like GPT with the ability to dynamically access custom data. RAG holds significant promise, not only enhancing the accuracy and relevance of custom data, but also opening up opportunities to create more robust systems.

If you’re interested in integrating the RAG system into your business operations, please don’t hesitate to reach out to us. We’re here to assist you.