Video Analytics - A novel approach

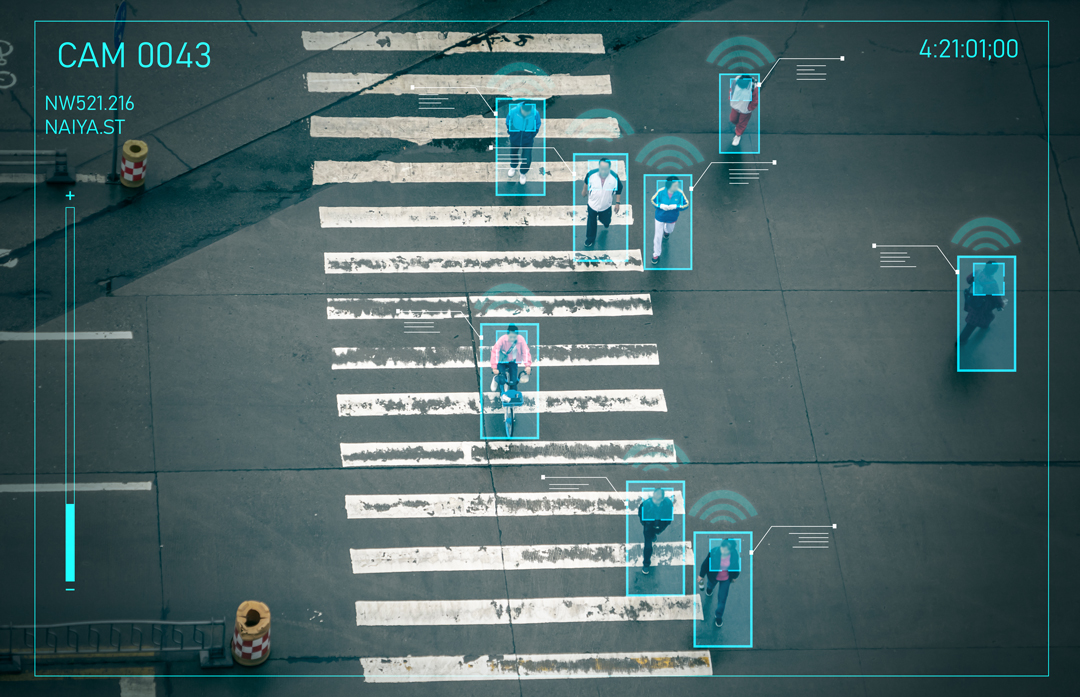

Imagine a world where cameras don’t just see but understand. A world where every second of a video is not just captured but analyzed and interpreted. This is the realm of video analytics, a field we are exploring with fascination. From identifying a simple hand gesture to recognizing complex actions in real time, video analysis is key to a deeper understanding of our visually dominated world.

The field of video analytics encompasses a wide range of challenges and tasks. It might involve the relatively straightforward task of action classification, where a model infers which activity is shown in a given video. More complex tasks include temporal action detection, which predicts the start and end timestamps of particular actions, and spatial action detection, which localizes every action in the video and marks it with a bounding box. Video analytics is more than a scientific endeavor; it serves many real-world purposes. For example, it can be used in cashierless stores, where customers take whatever they need and simply leave, because payment for the products is handled automatically. Another application is automatic number-plate recognition, which can replace standard tickets in parking lots and thereby speed up the overall process.
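To make the difference between the three tasks concrete, here is a small sketch of what each one might return for the same clip. The class and field names (`ClipLabel`, `TemporalSegment`, `SpatialDetection`) are hypothetical and not taken from any specific library; real systems vary in their coordinate conventions and score handling.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical output types for the three tasks; names are illustrative only.


@dataclass
class ClipLabel:
    """Action classification: a single label for the whole clip."""
    label: str
    score: float


@dataclass
class TemporalSegment:
    """Temporal action detection: when an action starts and ends (in seconds)."""
    label: str
    start_s: float
    end_s: float
    score: float


@dataclass
class SpatialDetection:
    """Spatial action detection: where an action happens in a given frame."""
    label: str
    frame_index: int
    box_xyxy: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
    score: float


def segment_duration(seg: TemporalSegment) -> float:
    """Length of a detected action in seconds."""
    return seg.end_s - seg.start_s


# Example predictions for the same 10-second clip.
classification = ClipLabel(label="waving", score=0.92)
temporal: List[TemporalSegment] = [
    TemporalSegment("waving", start_s=1.5, end_s=4.0, score=0.88),
    TemporalSegment("clapping", start_s=6.0, end_s=7.5, score=0.75),
]
spatial: List[SpatialDetection] = [
    SpatialDetection("waving", frame_index=45,
                     box_xyxy=(120.0, 40.0, 260.0, 300.0), score=0.81),
]

print(classification.label)                     # waving
print([segment_duration(s) for s in temporal])  # [2.5, 1.5]
```

Classification answers "what", temporal detection adds "when", and spatial detection adds "where", which is why the output structures grow richer from one task to the next.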

Each of these tasks poses a different challenge, which shows how broad the topic is and how many different purposes it can serve.

In this article, we use the term video analytics to refer collectively to action classification, temporal action detection, and spatial action detection. This focus will help us delve deeper into the intricacies and applications of these technologies in an expanding field.

Revolutionary Approach in Video Analytics

With the introduction covered, let’s turn to the main topic of our discussion: a novel approach in video analytics that closely mirrors strategies that have proven successful in other areas of deep learning. It relies on a foundational, self-supervised model that can easily be fine-tuned for numerous downstream tasks. Called VideoMAE v2, this model is among the first in video analytics to aim for a role similar to the one the GPT family of models has achieved in Natural Language Processing (NLP).

Before delving into a more in-depth analysis, let’s define what we mean by a “foundational” and “self-supervised” model:

Foundational Model: This term refers to models that can serve multiple purposes within a domain without major changes or significant additional training. They are typically trained on vast amounts of data and often match or surpass specialized, single-purpose models across many sub-domains. An example is ChatGPT in the NLP field, which can perform both translation and sentiment analysis without being trained specifically for either task.

Self-Supervised Model: This refers to models that do not require labeled data for training. Instead, the training signal comes from the data itself, and the model learns its general features. This removes the burden of collecting enormous amounts of labeled data, saving both time and money. Because unlabeled data is far easier to acquire, it can be collected in abundance, which is a major reason this approach can yield very good results.

Understanding the Model's Advanced Training Process

Let’s now delve into the workings of the model and see how it achieves its state-of-the-art performance. First, the model undergoes self-supervised pre-training, in which it learns general video representations from a vast collection of unlabeled video clips. These clips come from sources such as YouTube and Instagram, as well as from well-known public video analytics datasets like Kinetics and Something-Something. With around 1.35 million clips drawn from very diverse sources, the collected data is unprecedented in size and variety for the video domain.

The pre-training process aims to learn the general features of the data at hand. For this purpose, the authors use masked autoencoding in a video setting (hence the MAE in VideoMAE). The video is divided into small space-time patches, and a very high masking ratio of 90% to 95% is applied, so the Vision Transformer (ViT) encoder sees only a small fraction of the patches. Because neighboring frames are highly redundant, such aggressive masking is what turns reconstruction into a meaningful, challenging task rather than simple interpolation. The decoder part of the network then attempts to reconstruct the hidden patches from the encoder’s output. Training minimizes the Mean Squared Error (MSE) between the normalized pixels of the masked patches and their reconstruction. In essence, the network learns to recreate the missing parts of a video from the little it is allowed to see.
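The masking and loss described above can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors’ implementation: it assumes the tube-masking scheme of the original VideoMAE (the same spatial positions are hidden in every time slice), a 16-frame 224×224 input, and 2×16×16 space-time patches, which are typical settings chosen here for illustration. The encoder and decoder are omitted; an all-zero array stands in for the decoder output.

```python
import numpy as np

# Assumed input geometry (illustrative): 16 RGB frames of 224x224 pixels,
# split into space-time patches ("cubes") of 2 frames x 16 x 16 pixels.
T, H, W, C = 16, 224, 224, 3
T_P, P = 2, 16
N_T, N_H, N_W = T // T_P, H // P, W // P   # 8 x 14 x 14 grid of cubes
NUM_TOKENS = N_T * N_H * N_W                # 1568 cubes in total
PATCH_DIM = T_P * P * P * C                 # 1536 values per cube


def patchify(video: np.ndarray) -> np.ndarray:
    """(T, H, W, C) -> (NUM_TOKENS, PATCH_DIM), one row per cube, time-major order."""
    x = video.reshape(N_T, T_P, N_H, P, N_W, P, C)
    x = x.transpose(0, 2, 4, 1, 3, 5, 6)   # (N_T, N_H, N_W, T_P, P, P, C)
    return x.reshape(NUM_TOKENS, PATCH_DIM)


def tube_mask(mask_ratio: float, rng: np.random.Generator) -> np.ndarray:
    """Boolean mask over all cubes; True means hidden from the encoder.
    Tube masking hides the same spatial positions in every time slice."""
    num_spatial = N_H * N_W
    num_masked = int(mask_ratio * num_spatial)
    spatial = np.zeros(num_spatial, dtype=bool)
    spatial[rng.choice(num_spatial, size=num_masked, replace=False)] = True
    return np.tile(spatial, N_T)            # repeat the pattern for each time slice


def masked_mse_loss(video, reconstruction, mask, eps=1e-6):
    """MSE between per-cube normalized target pixels and the reconstruction,
    averaged over the masked cubes only (visible cubes do not contribute)."""
    target = patchify(video)
    mean = target.mean(axis=-1, keepdims=True)
    var = target.var(axis=-1, keepdims=True)
    target = (target - mean) / np.sqrt(var + eps)
    per_cube = ((reconstruction - target) ** 2).mean(axis=-1)
    return float(per_cube[mask].mean())


rng = np.random.default_rng(0)
video = rng.random((T, H, W, C), dtype=np.float32)
mask = tube_mask(0.9, rng)

# Only the visible cubes would be fed to the ViT encoder.
visible = patchify(video)[~mask]
print(NUM_TOKENS, int(mask.sum()), visible.shape)  # 1568 1408 (160, 1536)

# Stand-in for the decoder output: predicts zeros for every cube.
reconstruction = np.zeros((NUM_TOKENS, PATCH_DIM), dtype=np.float32)
loss = masked_mse_loss(video, reconstruction, mask)
print(round(loss, 3))                              # 1.0
```

With a 90% ratio in this setup, only 160 of the 1,568 cubes reach the encoder, so the encoder processes roughly a tenth of the tokens. An all-zero prediction scores a loss of about 1.0 against targets normalized to zero mean and unit variance, which gives a useful baseline for a trained decoder to beat.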

Following pre-training, the authors propose a supervised post-pre-training stage in which the model is trained on a mix of labeled video datasets. This stage incorporates the semantics of several datasets into the pre-trained model, which helps unleash its full potential and avoids overfitting to any single dataset during the final fine-tuning. Both post-pre-training and the final fine-tuning on a specific dataset use only the encoder part of the network, the ViT backbone; the decoder is discarded.

Finally, once the pre-training and post-pre-training stages are complete, we can fine-tune the model on a dataset of our choosing. Thanks to the earlier stages, the network weights need only minor adjustments: the model has already learned the general features of video data during pre-training and more task-specific semantics during post-pre-training. This enables it to deliver state-of-the-art results across various benchmarks with relatively little additional fine-tuning on any specific dataset.
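After pre-training, only the encoder is kept and a task-specific head is placed on top of it. The sketch below assumes a common design for ViT video classifiers, mean-pooling the encoder’s output tokens and applying a linear layer; the exact head used in VideoMAE v2 may differ. Random numbers stand in for the encoder output, and the sizes (768-dimensional tokens, 5 classes) are hypothetical. For brevity only the head is updated here; real fine-tuning also updates the encoder weights.

```python
import numpy as np

# Hypothetical sizes: 1568 encoder output tokens of width 768,
# and a downstream dataset with 5 action classes.
NUM_TOKENS, DIM, NUM_CLASSES = 1568, 768, 5


def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())          # subtract the max for numerical stability
    return e / e.sum()


def head_forward(tokens, W, b):
    """Mean-pool the encoder tokens, then apply a linear classification head."""
    pooled = tokens.mean(axis=0)     # (DIM,)
    return pooled, pooled @ W + b    # logits: (NUM_CLASSES,)


def cross_entropy(logits, label):
    return float(-np.log(softmax(logits)[label]))


def head_sgd_step(tokens, label, W, b, lr=0.1):
    """One SGD step on the head only; full fine-tuning would also
    back-propagate the gradient into the encoder weights."""
    pooled, logits = head_forward(tokens, W, b)
    grad_logits = softmax(logits)
    grad_logits[label] -= 1.0        # d(loss)/d(logits) = softmax - one_hot
    W_new = W - lr * np.outer(pooled, grad_logits)
    b_new = b - lr * grad_logits
    return W_new, b_new


rng = np.random.default_rng(0)
tokens = rng.standard_normal((NUM_TOKENS, DIM))  # stand-in for encoder output
W = np.zeros((DIM, NUM_CLASSES))
b = np.zeros(NUM_CLASSES)
label = 3

loss_before = cross_entropy(head_forward(tokens, W, b)[1], label)  # log(5)
W, b = head_sgd_step(tokens, label, W, b)
loss_after = cross_entropy(head_forward(tokens, W, b)[1], label)
print(loss_before > loss_after)                  # True
```

Mean pooling makes the head independent of how many tokens the encoder emits, so the same head design works for clips of different lengths or resolutions.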

Key Takeaways from Video Analytics

As we’ve highlighted, the domain of video analytics encompasses a wide array of challenges and tasks. It initially lagged behind Natural Language Processing (NLP) and image analysis, mainly because it required vast amounts of data that were harder to acquire, and its training process was more complex than in the image domain. However, with the advent of novel approaches such as VideoMAE v2 and the expansion of datasets, the field is advancing rapidly. Each new model delivers more promising results, marking a major leap forward in video analytics capabilities.

Leverage advanced video analytics through customized AI solutions from theBlue.ai

At theBlue.ai, we seamlessly integrate our in-depth AI expertise into the development of advanced solutions for video analysis. We specialize in creating customized, AI-based video analysis systems that are precisely tailored to your projects and business objectives. Contact us for an effective and goal-oriented transformation of your ideas into practical applications.