The Power of Effective Prompt Engineering in AI Development

In today’s rapidly changing technological landscape, artificial intelligence (AI) is playing an increasingly significant role in every aspect of our lives. From healthcare to finance, AI is transforming industries by automating tasks, predicting outcomes, and providing insights that were once unimaginable. However, even the most advanced AI models require a human touch to truly unlock their potential. That’s where prompt engineering comes in.

Prompt engineering is the art of crafting natural language prompts that enable AI models, specifically large language models (LLMs), to perform chosen tasks. Simply put, prompts are phrases or sentences given as input to an AI model to instruct it to perform a particular task. A prompt can be used to generate text, classify images, or explain code.

For example, in natural language processing (NLP), a prompt can be used to guide a language model (like GPT-3 and ChatGPT) to generate text that matches a certain style or tone. A prompt can also be used to generate a continuation of a given text, such as completing a sentence or a paragraph.

In image generation, prompts can be used to guide a model (such as DALL-E 2 or Stable Diffusion) to generate images of a specific type or style. For instance, a prompt can be used to generate images of cats or dogs, or to generate images in a particular style, such as impressionism or cubism.

Overall, prompts are a crucial part of generative AI because they provide a means of controlling the output of the model and ensuring that it generates content that is relevant and useful for a particular application or task.

A prompt can contain the following elements:

- Instruction – the task or activity that needs to be completed. Frequently used verbs include write, summarize, answer, create, extract, etc. The instruction can also be much more detailed and describe how the model should achieve the result.

- Input/Payload – additional text, image, or any other input, that the model should work on. For example, a text that we want the model to summarize or a tweet, for which the model should analyze the sentiment.

- Context – additional description of the role that the model should play to fulfill the task, or more details about the task itself, for example: “you are a marketing expert specializing in SEO and positioning…”

- Examples/Output instructions – we can help the model achieve better results by giving it a few examples to learn the pattern from (see the part about few-shot learning below). We can also specify an exemplary output structure, such as a specific JSON structure, into which we want the model to extract information from the input or other sources.

Depending on the specific task we want the generative model to complete and on the type of model, we can use all or some of the above-mentioned elements. They apply especially to text-to-text generative models. In the case of text-to-image or other types of generative models, such as text-to-video or text-to-3D, there can be additional aspects worth paying attention to, such as lighting, perspective, and style.
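As an illustration, the elements listed above can be assembled into a single text prompt. The sketch below is only one possible layout: the function name `build_prompt`, its parameters, and the `Input:`/`Output:` labels are assumptions made for this example, not a format required by any particular model.

```python
def build_prompt(instruction, payload="", context="", examples=None, output_format=""):
    """Assemble a text prompt from the optional elements of a prompt.

    All names and labels here are hypothetical; adapt them to your model.
    """
    parts = []
    if context:
        parts.append(context)          # role or background for the model
    parts.append(instruction)          # the task itself
    for example_input, example_output in examples or []:
        parts.append(f"Input: {example_input}\nOutput: {example_output}")
    if output_format:
        parts.append(f"Respond in this format: {output_format}")
    if payload:
        parts.append(f"Input: {payload}\nOutput:")  # the text to work on
    return "\n\n".join(parts)


prompt = build_prompt(
    instruction="Classify the sentiment of the tweet as positive, negative, or neutral.",
    payload="The new update is fantastic!",
    context="You are a marketing expert specializing in SEO and positioning.",
)
print(prompt)
```

Only the instruction is mandatory in this sketch; every other element is skipped when it is left empty, which mirrors the idea of using all or some of the elements.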

When creating prompts, we have a few methods at hand that can be used in different scenarios. The basic techniques include zero-shot learning, few-shot learning, and model fine-tuning.

If we analyze all the possibilities that this technology offers for solving our business problem, and combine that with a sound knowledge of its current limitations and the techniques to mitigate them, we can build effective business solutions very quickly.

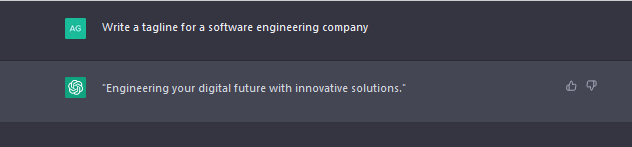

Zero-shot learning

The first of them is called zero-shot learning. In this method, we give an instruction or a question to the model and expect the answer without giving it any examples or patterns of what we would like to achieve. This is the simplest of the methods, and usually, the one we start with when working on a new task.

Examples of zero-shot prompts:

- What is the diameter of Earth?

- Create a summary of the below text: [Text]

- Generate a catchy slogan for an online marketing agency

- Translate German to French: Bier =>

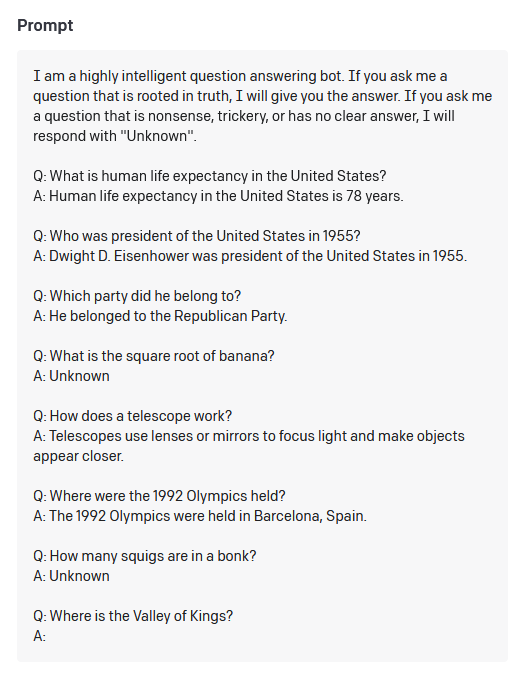

Few-shot learning

In this method, apart from the task description, we provide the model with a few examples of the task. This method uses in-context learning, meaning that we “train”, or rather steer, the model toward the expected results without changing the model itself. It is recommended for more complex tasks where zero-shot learning is not enough. Sometimes we can also encounter one-shot learning, which uses just one example instead of a few.

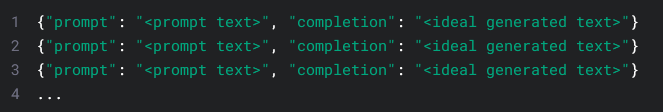
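A few-shot prompt can be sketched as a task description followed by example pairs and the new query. The helper below is a minimal illustration: the name `few_shot_prompt` and the `=>` separator (borrowed from the translation example earlier in this article) are assumptions, not a required syntax.

```python
def few_shot_prompt(task, examples, query):
    """Build a prompt from a task description, worked examples, and a new query.

    With an empty `examples` list the result is a zero-shot prompt;
    with a single pair it is a one-shot prompt.
    """
    lines = [task]
    for source, target in examples:
        lines.append(f"{source} => {target}")  # one worked example per line
    lines.append(f"{query} =>")                # the model should complete this line
    return "\n".join(lines)


examples = [("Brot", "pain"), ("Wasser", "eau")]
print(few_shot_prompt("Translate German to French:", examples, "Bier"))
```

The examples show the model the expected pattern, so it is more likely to answer with a single French word rather than a full sentence.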

Model fine-tuning

Some models, such as the GPT model family, give us the additional option of fine-tuning the model with our own examples. Even though it is not strictly a prompting technique, it is worth mentioning here as the next step in complexity when the zero-shot and few-shot approaches are not enough to achieve our goals. In this approach, we use a separate API to which we provide a prepared dataset of prompts and completions (correct answers to the prompts), based on which the model learns to adapt to our specific use case. Usually, it takes thousands of task-specific examples to achieve the desired effect. With this method, the examples do not have to fit within the maximum prompt and response length, which is the case for few-shot learning.
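To give a feel for what such a dataset can look like, the sketch below serializes prompt and completion pairs as JSON Lines (one JSON object per line). The field names `prompt` and `completion` simply mirror the wording above and are an assumption; the exact file format depends on the provider, so check its fine-tuning documentation before preparing real data.

```python
import json


def to_jsonl(records):
    """Serialize (prompt, completion) pairs as JSON Lines, one record per line."""
    lines = []
    for prompt, completion in records:
        # Field names are assumed for illustration; providers may expect others.
        record = {"prompt": prompt, "completion": completion}
        lines.append(json.dumps(record, ensure_ascii=False) + "\n")
    return "".join(lines)


records = [
    ("Translate German to French: Bier =>", "bière"),
    ("Translate German to French: Brot =>", "pain"),
]
dataset = to_jsonl(records)
print(dataset, end="")
```

A real dataset would contain far more records than the two shown here, and would typically be written to a file and uploaded through the provider's fine-tuning API.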

While prompts may seem simple, they play a critical role in how effectively Generative AI models can be applied to our scenario. The quality and specificity of prompts directly affect the accuracy and relevance of AI-generated results. Poorly designed prompts can lead to biased or irrelevant results, while well-designed prompts can significantly improve them.

Let’s consider the following very basic examples of a good and a poor prompt:

Good Prompt: “What are the symptoms of the common cold?”

- This prompt is specific to the task at hand: it names a single, well-defined topic, so the model can give a focused and relevant answer.

Poor Prompt: “Tell me everything you know about healthcare.”

- This prompt is too broad and unspecific, making it difficult for the AI model to provide accurate and relevant results.

There is no one-size-fits-all rule for writing the best prompts, but there are a few hints that in most cases lead to good-quality results:

- Start simple and add more details in the next iterations. Remember that prompt engineering is an iterative process and usually requires many experiments to achieve optimal results.

- Following the simplicity rule: start with the zero-shot approach; if the results are not satisfactory, use few-shot learning, and as the next step, fine-tuning.

- Be very specific about what you would like to achieve. In general, the more detailed the instruction, the closer the results will be to what you want. This is particularly interesting in the case of text-to-image models such as Stable Diffusion, where an exact description of the lighting or even the parameters of the camera lens can make a big difference in the quality of the generated image.

- If you want to get output in a specific format, use examples of the structure in the prompt.

- Read the documentation and follow the prompting hints specific to a model. For example, in the case of the GPT-3 model, when working with languages other than English, it is advised to write the prompt in English and ask for a response in the language of choice, while keeping the input text in the original language.

- Be aware of the limitations of your large language model of choice and adapt your prompting strategy accordingly. For example, pay attention to the maximum number of tokens per request (4,096 in the case of GPT-3.5).
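The hint about output formats can be illustrated with a short sketch: the prompt shows the model the structure we expect, and the reply is validated before it is used. The function names, the example structure, and the sample reply below are all made up for this illustration; no real model is called.

```python
import json

# Hypothetical output structure that we show to the model inside the prompt.
OUTPUT_EXAMPLE = {"product": "string", "sentiment": "positive | negative | neutral"}


def extraction_prompt(review):
    """Build a prompt that includes an example of the expected JSON structure."""
    return (
        "Extract the product name and the sentiment from the review below.\n"
        "Answer with JSON only, using exactly this structure:\n"
        f"{json.dumps(OUTPUT_EXAMPLE, indent=2)}\n\n"
        f"Review: {review}"
    )


def parse_reply(reply):
    """Parse a model reply and check that it contains the requested keys."""
    data = json.loads(reply)  # raises json.JSONDecodeError on invalid JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = set(OUTPUT_EXAMPLE) - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    return data


# A hand-written stand-in for a model reply, used only to exercise the parser.
sample_reply = '{"product": "X100 headphones", "sentiment": "positive"}'
print(parse_reply(sample_reply))
```

Validating the reply matters because a model may still return text that does not match the requested structure; in that case the prompt can be refined in the next iteration.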

When building solutions based on large language models and Generative AI, we must be aware not only of their great potential for solving even very complex problems, but also of the risks and safety practices connected with them.

A few considerations include:

- Bias – Large Language Models (LLMs) can be biased based on the data they are trained on, which can lead to unfair or inaccurate responses. It’s important to validate the quality of the LLM for our use case and to minimize the bias by using, for example, the right prompting techniques or fine-tuning.

- Accuracy – LLMs are not always accurate, and they can make mistakes or generate responses that are not relevant to the prompt. It’s important to test and validate the model before deploying it in a production environment.

- Model Hallucinations – following up on the Accuracy point, one of the most important challenges when working with LLMs is the situation in which the AI generates false responses in a very convincing manner. We can work against this, for example, by adapting the model’s parameters, preparing robust prompts, or providing a reliable source in the context of the model.

- Data privacy – when building the prompts, we need to be aware of how exactly the data that we use as input will be processed, especially when it concerns personally identifiable information or any other sensitive data. For example, the data used when talking with ChatGPT can be used for further model retraining – which means that we have to be careful not to use any PII in order to be compliant with GDPR and other relevant regulations.
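As a small illustration of the data privacy point, personal data can be masked before the text is placed in a prompt. The sketch below replaces email addresses with a placeholder; the pattern and the function name `redact_emails` are assumptions for this example. It catches only simple, common address formats and is not a complete PII filter.

```python
import re

# Simplified pattern for common email addresses; it will not match every valid form.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def redact_emails(text, placeholder="[EMAIL]"):
    """Replace email addresses in `text` with a placeholder before prompting."""
    return EMAIL_PATTERN.sub(placeholder, text)


message = "Please summarize the complaint sent by jan.kowalski@example.com."
print(redact_emails(message))
```

Other kinds of sensitive data, such as names, phone numbers, or addresses, need their own handling, and a production system would usually rely on a dedicated anonymization tool rather than a single regular expression.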

As Generative AI is a very fast-changing field, it’s crucial to stay up to date with new developments, both to take advantage of the opportunities that new models and features bring and to keep track of newly detected risks. One of the more advanced security topics for LLMs is prompt injection, or more broadly adversarial prompts, which we need to be aware of when building Generative AI-based solutions.

In this article, we presented the basic concepts connected with prompt engineering. Creating well-designed prompts is one of the ways we can effectively use Generative AI models.

When building effective Generative AI solutions, we should also consider other aspects, such as choosing the right model and its parameters, or more advanced prompting techniques, such as chain-of-thought prompting. The more advanced scenarios include applying generative models in combination with a company’s own data, which adds immense value to applications of Generative AI in business. Follow us to get to know more about Generative AI.

If you are looking for an experienced partner for implementing an effective Generative AI solution adapted to your business and your data, get in touch with us.