Diffusion Models – how to create stunning images with generative AI

Artificial intelligence (AI) is becoming more and more popular because it is taking part in almost every area of our lives, and this trend has been noticeable for the last few years. However, some time ago, interest in artificial intelligence increased significantly because of prompt engineering. The revolution took place in both text (with models and solutions such as ChatGPT and GPT-4) and image (Stable Diffusion, DALL-E 2) generation. In this article, we want to present to you the most important facts and methods connected with the image generation field (diffusion models). All of the images included in this article were generated with Stable Diffusion models.

Diffusion models are generative models, which means they are trained by attempting to generate images as close as possible to the training data. The training process involves adding Gaussian noise to the data and learning how to recover data by the denoising process. During the inference stage, the model uses randomly sampled noise to generate results.

Input noise and result generated by a diffusion model.

Using this technique, we are capable of creating almost infinitely different images based on text prompts. We can take advantage of the diffusion models in many different ways. The most known example of using generative AI models in image creation is art (with AI even winning art competitions), but they can also be used as inspiration for graphic designers and create images and even videos for advertisements, book illustrations, marketing materials, or input for business presentations.

How to use Diffusion Models?

Diffusion models generate images based on a prompt (kind of a command given to an AI model, describing in human language what we want the model to generate), and it is crucial to create a proper prompt to achieve the desired effect. A good quality prompt should include three components: frame, subject, and style.

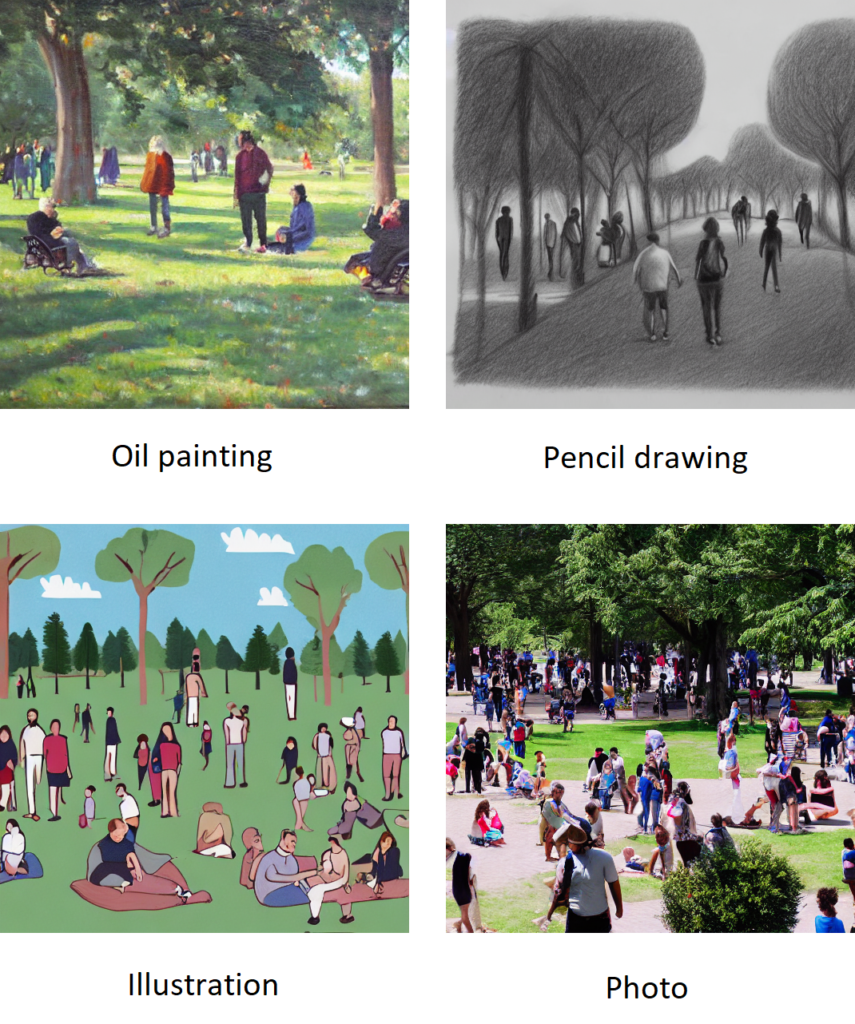

- Frame – Frame refers to the type of generated image. Examples of frames include a photo, a poster, an illustration, an oil painting, a pencil drawing, or a 3D cartoon. If the frame is not specified, the image will be created with the default type, which is a picture. Below, you can see the results created with the prompt “People in the park,” but with different types of frames.

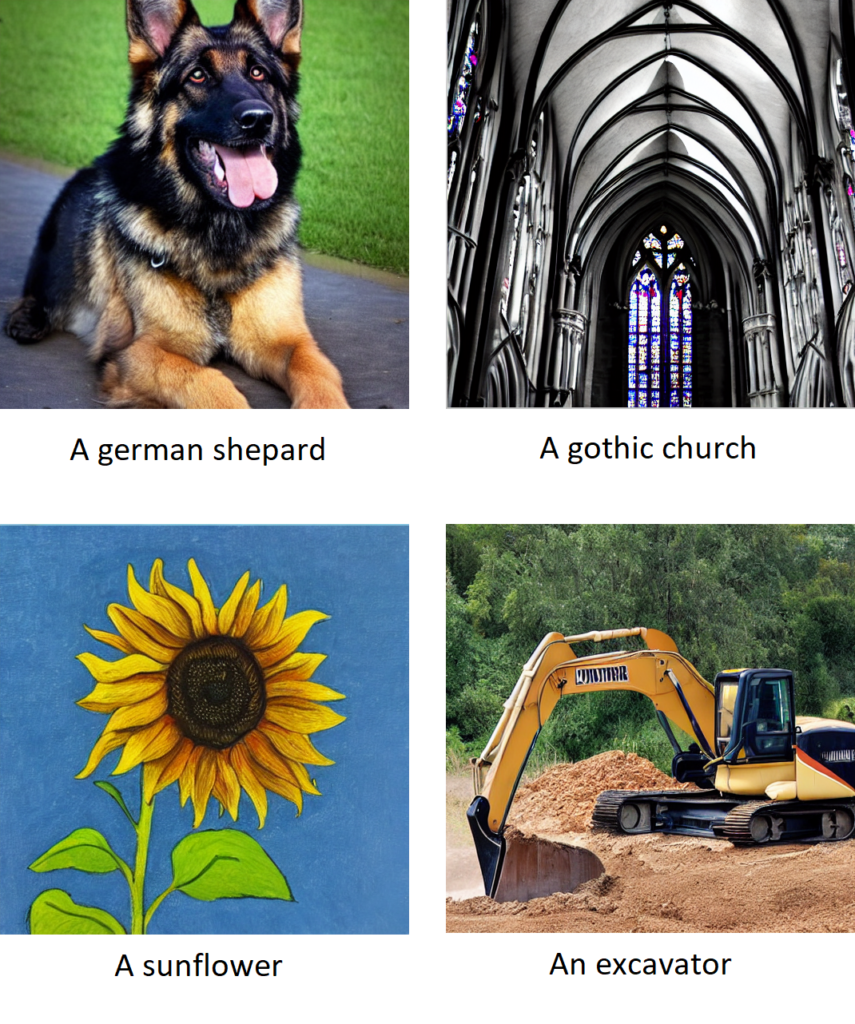

- Subject – the main object of the generated image, it is the most important part of the prompt. Diffusion models have been trained with millions of images, so they should be able to generate proper results even for very specific prompts.

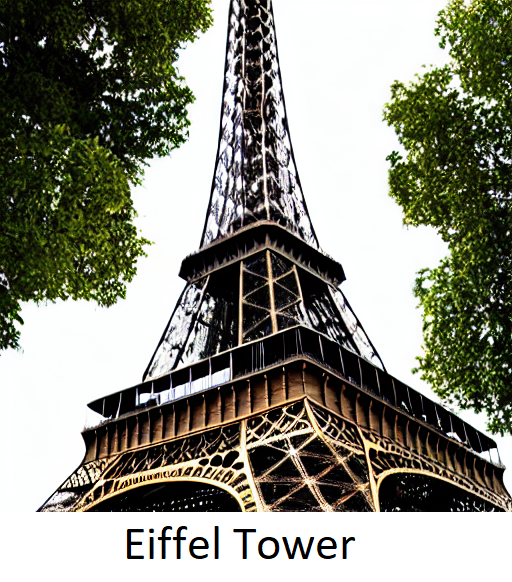

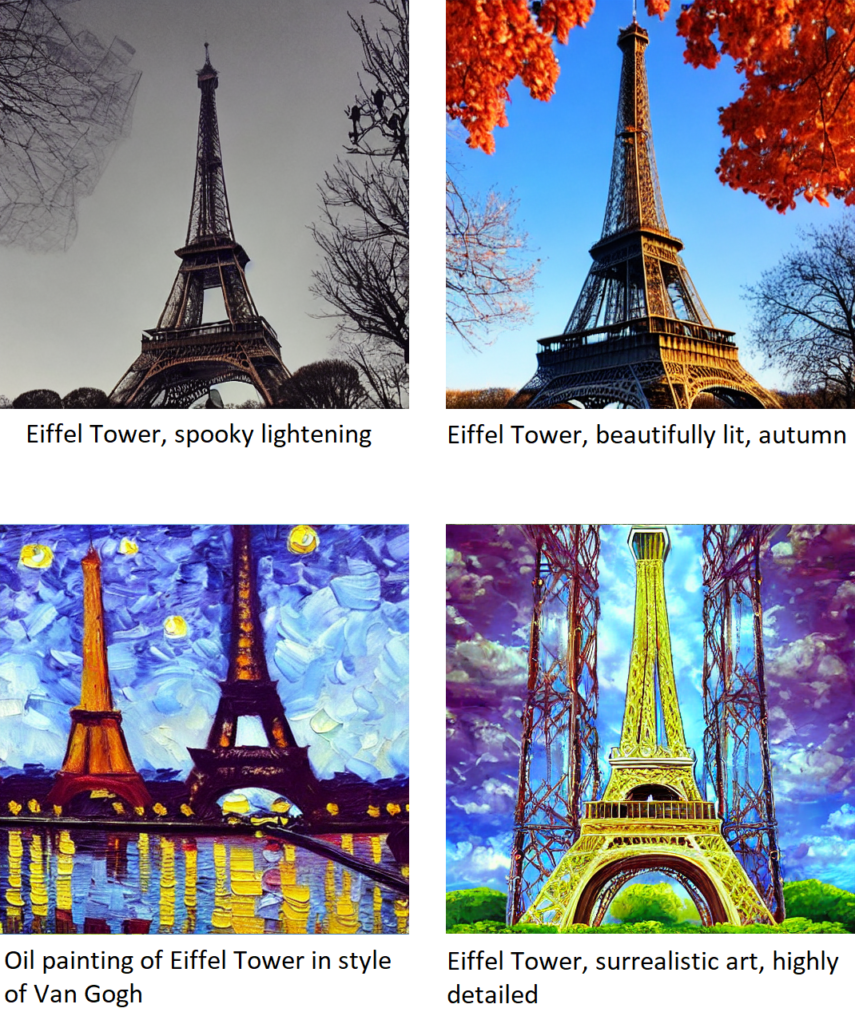

- Style – refers to the style of the image, including factors such as lighting, theme, period, and art style. The more details provided about the style, the better the diffusion models can visualize people’s imagination. Again, it all comes down to the prompt. Let’s try an image of the Eiffel Tower.

And Eiffel Tower with applied different styles:

Problems connected with Diffusion Models

Diffusion models are powerful tools, but they are not perfect. There are some limitations, which we need to take into consideration when building solutions based on them.

- Too long subject part – if the subject part is too long and describes several different items, the model probably will omit the last few things. The best way to ensure that the model creates an image including all of our subjects is to limit the subject to one or two items. Another conclusion from this rule is to place the most important part closer to the beginning of the prompt.

Prompt: ,,Oil painting of the beautiful landscape with herd of horses, small house in the background, and waterfall, sunny day, happy light”

The result doesn’t contain house, but when we change the order a little to: ,,Oil painting of the beautiful landscape with waterfall, small house in the background and herd of horses, sunny day, happy light,” we may get an image with a house included.

This time the model omitted horses in the generated image.

- Generating weird-looking faces – models are capable of generating realistic-looking faces, but at the moment of writing the article, they have problems with generating a few faces in one image.

Here is the result of the prompt: ,,A photo of the man with beard and glasses, highly detailed, real life, high quality”

But if we increase the number of people, the faces become distorted: ,,A photo of six people during office meeting, highly detailed, real life, and high quality”

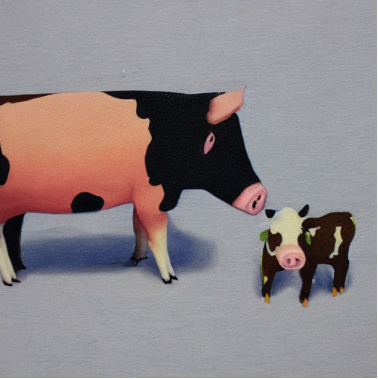

- Fusing two subjects into one – Sometimes when the prompt includes two less common subjects from the same thematic group (like animals), the diffusion model may generate fused objects instead of two separate subjects.

The output image of prompt: ,,A cow and a pig”:

The output image includes two animals, but they look more like a hybrid of a cow and a pig. The problem usually disappears when we try with very popular subjects – “A cat and a dog”

- Generating text on image – Generating text on an image can be challenging for diffusion models, as they often struggle with placing the text correctly. The resulting image may include the text, but with added or disordered words that make it difficult to read or understand:

Diffusion Models pipelines

- Text to image – This pipeline relies on generating an image based solely on a text prompt. All of the above examples were generated with this pipeline, which is the most basic way of utilizing diffusion models.

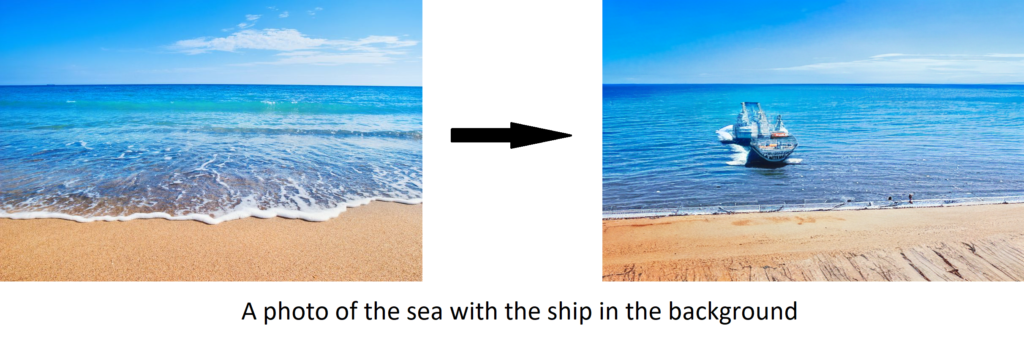

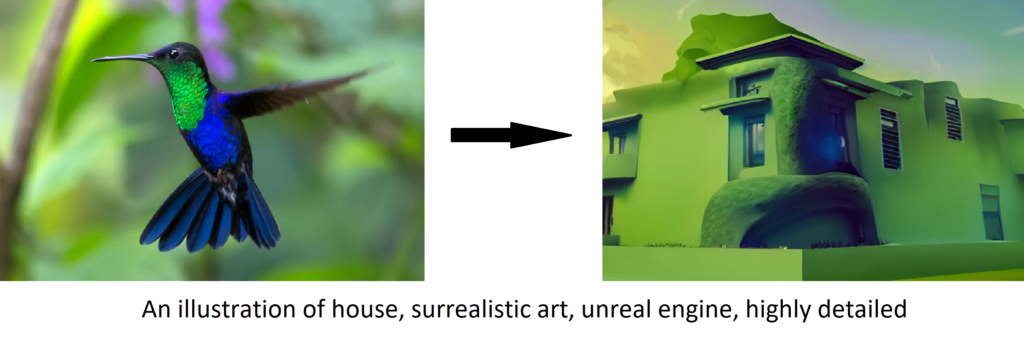

- Image to image – This pipeline involves using both an image and a text prompt to generate another image. The input image serves as a base for the output image, and the user can control the degree of similarity between the two. One potential use case is simply editing the input image:

It can also be used to generate images from sketch:

Or just to reuse the color palette from the input image:

Diffusion models fine-tuning

A very important feature of diffusion models, when thinking about their business applications, is the possibility of retraining models with custom data. After fine-tuning, users can generate images with their own subjects, such as a person, animal, product, or company logo (although there can be problems with generating text). This property is essential for many business applications which rely on generating customized marketing and sales materials, including company-specific pictures and objects.

A very quick proof of concept allowed us to generate a few different variations of our logo, using only three pictures for retraining:

As you can see, the results are not perfect, but the model was able to capture the main features from just three training images. With more training data, it has the potential to create interesting and visually appealing company materials.

Summary

In this article, we have presented the most important information about Diffusion Models. As you can see, it is a powerful tool, and the main limitation of Diffusion Models is our imagination.

This field of Artificial Intelligence (AI) is intensively developed and in the near future, the problems mentioned above will probably be eliminated, which will increase the power of Diffusion Models.

Use diffusion models in your company

At theBlue.ai, we are committed to utilizing the capabilities of diffusion models to provide groundbreaking solutions for various industries and businesses. Our team of experts is constantly researching and developing new approaches to maximize the potential of generative AI models for our customers. We believe diffusion models have the potential to revolutionize the way businesses operate, and we look forward to being at the forefront of this rapidly evolving field.

If you are interested in exploring the potential of diffusion models for your business, please don’t hesitate to contact us. Our team of experts would be happy to discuss how we can help you create innovative solutions using this powerful technology and to create a quick proof of concepts allowing you to internally prove the value of generative AI for your company and your products.